Global Corp - PySpark Optimization

Case Study

About

This case study shows how a large-scale finance data pipeline was optimized using PySpark and Delta Lake in Microsoft Fabric. It improves performance and reliability when joining tens of millions of transaction records by using optimized joins, schema enforcement, time travel, and table maintenance techniques such as OPTIMIZE and Z-ORDER to support daily financial reporting.

Challenge

-

Performance Issue – The daily finance pipeline took more than two hours to complete, delaying critical aging reports

-

Large Data Volume – The pipeline had to join tens of millions of transaction records with customer master data efficiently

-

Data Reliability Issues – Corrupted data runs reduced trust in reports and required a way to fix historical errors

-

Lack of Visibility – The team did not have clear insight into performance bottlenecks or how to optimize the pipeline

Solution

-

Performance Diagnosis – Used Spark UI to analyze execution plans and identify shuffle-heavy stages causing performance bottlenecks

-

Join Optimization – Re-engineered the PySpark join logic by applying broadcast joins to efficiently join tens of millions of records with smaller dimension data

-

Delta Lake Reliability – Applied schema enforcement and data quality filtering to handle invalid records, used DESCRIBE HISTORY to audit table changes, and leveraged time travel and restore to recover from corrupted data.

-

Table Optimization – Improved performance and storage efficiency by applying OPTIMIZE and Z-ORDER on large Delta tables.

Business impact

-

Optimized Large-Scale Joins – Using broadcast joins removed expensive shuffles by sending the small customer dataset to all executors, enabling efficient joins with tens of millions of transaction records

-

Reliable Data Recovery – The Delta Lake transaction log provided a full history of table changes, allowing time travel queries and RESTORE operations to quickly recover from corrupted data runs.

-

Improved Query Performance – OPTIMIZE reduced the small file problem through bin-packing, while Z-ORDER improved data locality, making queries on large Delta tables significantly faster.

-

Enterprise-Grade Stability – The combination of performance tuning, auditability, and recovery mechanisms resulted in a scalable and trustworthy pipeline for daily financial reporting.

This view shows the initial PySpark join between the large transactions dataset and the customer master table using a standard left join. The execution highlights data skew and performance warnings, indicating expensive shuffles when joining tens of millions of records. This step helps identify the root cause of the long runtime and establishes the baseline performance issue before applying any optimization techniques.

When a broadcast join is used, Spark sends a copy of the small Customer Master table to each executor node. This allows the large Transactions dataset to be joined locally on every executor without shuffling the large data across the network. By eliminating expensive shuffle operations, this approach significantly improves performance when joining tens of millions of records with a much smaller dimension table.After this, the Transactions dataset is repartitioned on CustomerID to evenly distribute data and reduce skew for downstream processing. This combination improves overall performance when working with large data.

The DESCRIBE HISTORY output shows the full change history of the Customer_Master Delta table and confirms that corrupted data was merged into the table during a previous run. The log captures the MERGE operation along with timestamps and version details, providing clear audit evidence of when the issue occurred. This visibility makes it possible to identify the exact version affected by bad data and supports recovery using Delta Lake time travel and restore capabilities.

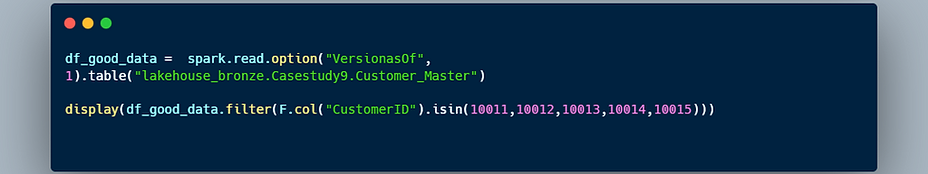

This step uses Delta Lake time travel to read a previous version of the Customer_Master table before the corrupted data was merged. By specifying the VersionAsOf option, the pipeline retrieves a known good version of the data and filters specific customer records for validation. This confirms that Delta Lake allows access to historical table states, making it possible to compare, validate, and recover data when issues occur.

This step restores the Customer_Master Delta table to a previous stable version using Delta Lake’s RESTORE command. By rolling the table back to a known good version identified from the transaction history, the corrupted data introduced by the earlier merge is removed. This demonstrates how Delta Lake enables safe and controlled recovery of production data, ensuring data reliability without manual reprocessing or data reloads.